Robots That Get It Wrong

To err is human -- but machines can do it, too. Sandra Okita believes we can learn from their mistakes.

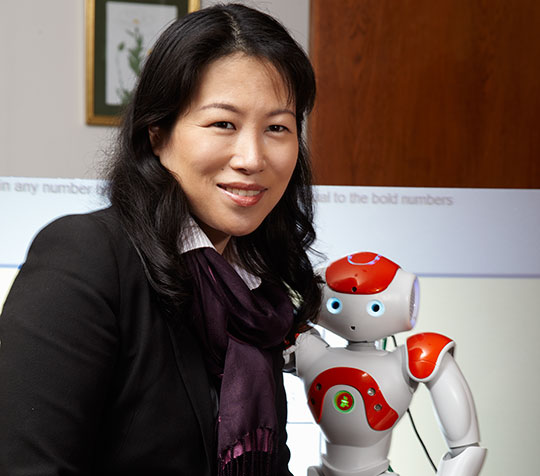

On a recent afternoon in TC’s Human Robot Interaction And Learning Laboratory, an 18-inch, white plastic robot named Projo was staring, with lightbulb eyes, at some math problems on a computer screen.

“Hi, Projo, how’s it going?” Sandra Okita, Assistant Professor of Technology and Education, asked the robot casually. “We’re going to be math buddies today.”

“What happens if I get something wrong?” Projo asked in a wispy, plaintive voice. “I don’t want to make mistakes.”

As it turned out, Projo (short for Projective Agent) was able to solve the problems, and he drew a plastic hand across his forehead in relief when Okita praised him for a job well done. But in many ways, his potential fallibility was the point. Okita and her students write programs for robots and avatars (virtual characters animated by humans through computers) to help small groups of learners, mostly elementary- and middle-school students, practice sets of problems in English, math, biology and other subjects. On the one hand, the artificial critters can assume the efficient, machine-like role you might expect, monitoring kids as they progress through their lessons, tracking the problems they get wrong and prompting repetitions with the patience that perhaps only a machine could summon. On the other hand, the robots and avatars that Okita designs can also make the same mistakes students make, a highly valuable service that reflects Okita’s belief that learning, at its best, is a social enterprise.

“The key phrase across all my projects is ‘peer learning,’” Okita says. “Peer learners either teach us something we didn’t know or mirror back to us something about ourselves. Sometimes the peer is human, sometimes it is an agent or avatar or a computer graphics character that resides inside a computer. Sometimes it’s a physical robot.”

Why use technology to approximate human roles? For starters, children love working with nonhuman peer learners, as demonstrated by Okita and two collaborators from Stanford University: TC alumnus Daniel Schwartz of Stanford’s School of Education (see story) and Jeremy Bailenson of its Department of Communication. Kids identify with robots and virtual characters that they create or customize, and they make imaginative leaps when working with them. Peer learners also generate data that teachers can analyze to see which problems are the most difficult for individual children or an entire class.

“Projo has an assessment side to him and also helps kids self-correct their math calculations,” Okita says.

Then, too, she adds, human peers can’t always be available and may not be at the ideal learning level—slightly, but not too far, ahead of the student—to be of maximum benefit. And while human peers or teachers can do their best to make information accessible, they may lack the time or expertise to verify that the student has actually learned it.

One powerful technique for making such a determination is for the technology to behave humanly, specifically by replicating a student’s own mistakes. Imagine a sixth grader who learns a certain math concept at school, Okita says, under the guidance of a teacher. He logs in later at home and, with the help of a personalized computer avatar (which he may have designed and named, or downloaded from the Internet and customized), he reviews the day’s math problems and takes a quiz. All of this is good practice and will help strengthen existing skills. But now comes the twist: The avatar, too, can take the quiz, make the same mistakes that the student is making, and provide the student with an opportunity to make corrections. It is a variation on the old adage that the best way to learn something is to teach it to someone else.

“I really believe that an influential educational technology isn’t the kind that’s infallible, but instead, the kind that is most human,” Okita says.

The concept of using robots and computers in educational settings began in the late 1970s, triggered in part by the endearing androids of Star Wars, R2-D2 and C-3PO, which spawned an entire industry of robotic toys and educational games for children. At that time, robotic science was already evolving as a higher-education discipline in which engineers and computer scientists pursued the development of increasingly intelligent, sophisticated robots and avatars, mostly for manufacturing and military use. In subsequent campaigns reminiscent of the United States’ Sputnik-era competition with the Soviet Union, private businesses began sponsoring contests to encourage children to learn to build and program robots.

Still, robotics remained the domain of the physical sciences until the 1990s, when cognitive scientists started to explore how humans interact with robots. That line of inquiry heated up when avatars entered the educational and recreational computer-game scene. At first they were off-the-shelf, online figures that could be downloaded, “claimed” and sometimes named by a gamer who controlled them anonymously in cyberspace. But by the early 2000s, gamers could create their own avatars as alter egos and control them, still anonymously, in online games and virtual communities. Psychologists and educators became interested in avatars as representations of a person’s identity, fantasies and ego, and robotics and computer science began to attract researchers like Okita, who holds a doctorate in educational psychology.

Around 2005, Okita began to add her voice to the academic study of human-robot interaction. Previous computer research had focused on giving robots superhuman memory and infallible computational skills, and on imbuing avatars with extraordinary physical prowess. Building on the premise that peer learning can be the best way to teach and absorb knowledge, Okita and a small number of education researchers began asking whether students would do better with a robot that behaved more like a human. Would the robot be more effective, they asked, if it maintained eye contact for a longer period? If it mimicked human gestures or voices more closely? If it actually made a mistake?

When Okita joined TC’s Mathematics, Science and Technology Department in 2008, John Black, the Cleveland E. Dodge Professor of Telecommunications and Education and Chair of the Department of Human Development, was pursuing a very similar line of inquiry. Black’s research focuses on grounded, or embodied, cognition and the theory that learning is most successful when it is reinforced or even precipitated by a physical experience—or, as Black puts it, when the learner is able to “create both a mental and perceptual simulation of a concept or process” (see story, page 48).

“Simulation” is the key word here, because it implies comparison and the reconciliation of difference, as with someone fitting a shape to a preexisting space. For example, using technologies based on the concept of grounded cognition, a dancer or athlete might digitally capture his or her own movement, then overlay it against a move perfectly executed by an on-screen avatar to compare the two. Or a learner could execute physical gestures that direct an avatar to do something, such as stack and count a group of blocks on a screen, and then observe how well those directions play out. In both cases, the avatar acts as a peer learner, and the gestures by which the student moves the avatar serve as a physical reminder of the process.

Okita is studying which virtual peer learners, programmed to be humanly imperfect, are the most helpful in encouraging grounded cognition, and she also is teaching educators how to use them. In Tokyo last summer, she worked at the Honda Research Institute, designing and testing robots for educational settings.

“Technology and robots present an array of interesting design choices when modeling interactions with human learners,” she says. “Isolating features through empirical studies can help us examine the cause-and-effect relationship, which can help in designing interventions that contribute to human learning and behavior.”

One important finding, made by other researchers and emphatically corroborated by Okita, is that it is not good for a robot or avatar to be too human. Researchers describe a phenomenon they call “the uncanny valley,” in which a robot mimics human behavior or appearance so perfectly that people of all ages become uncomfortable and children become afraid. Hence the importance, particularly with young children, of leaving room for the imagination by designing robots and avatars with cartoonlike or exaggeratedly robotic features.

In addition to their work with robots, Okita and her students in the Instructional Technology program (who are also studying with Charles Kinzer, Professor of Education) are designing instructional avatars for the Second Life virtual world and testing them with children. The aim is to discover which avatar and robot features, and what level of customization, are best for young learners. The team initially tested the effectiveness of learning from the teaching of a human peer. Then they tested how students fared with virtual peer learners. The next step, Okita says, is to see how well humanoid robots like Projo perform as peer learners for students.

Ultimately, the goal is to find what Okita calls “the sweet spot” in technological design in order to determine how finely one can calibrate the responses of avatars and robots to human commands, and how humanlike to make them in order to be most effective while avoiding the uncanny valley. Currently Okita is looking for funding to support this research.

Of her students, Okita says, “We’d like to develop the next generation of robot programmers, to learn how to design human-to-robot interactions.” She believes that robotics can be used as an effective and exciting vehicle to get elementary- and middle-school students interested in the type of work she herself is doing.

As excited as she is by what technology can do in encouraging learning, Okita keeps its power in perspective. She is first an educator and researcher for whom learning is paramount, and it is learning, not technology, that drives her work. “The curriculum should always be at the center of whatever technology you use,” she says. “Technology should support your curriculum, not the other way around.”

Published Wednesday, May 2, 2012